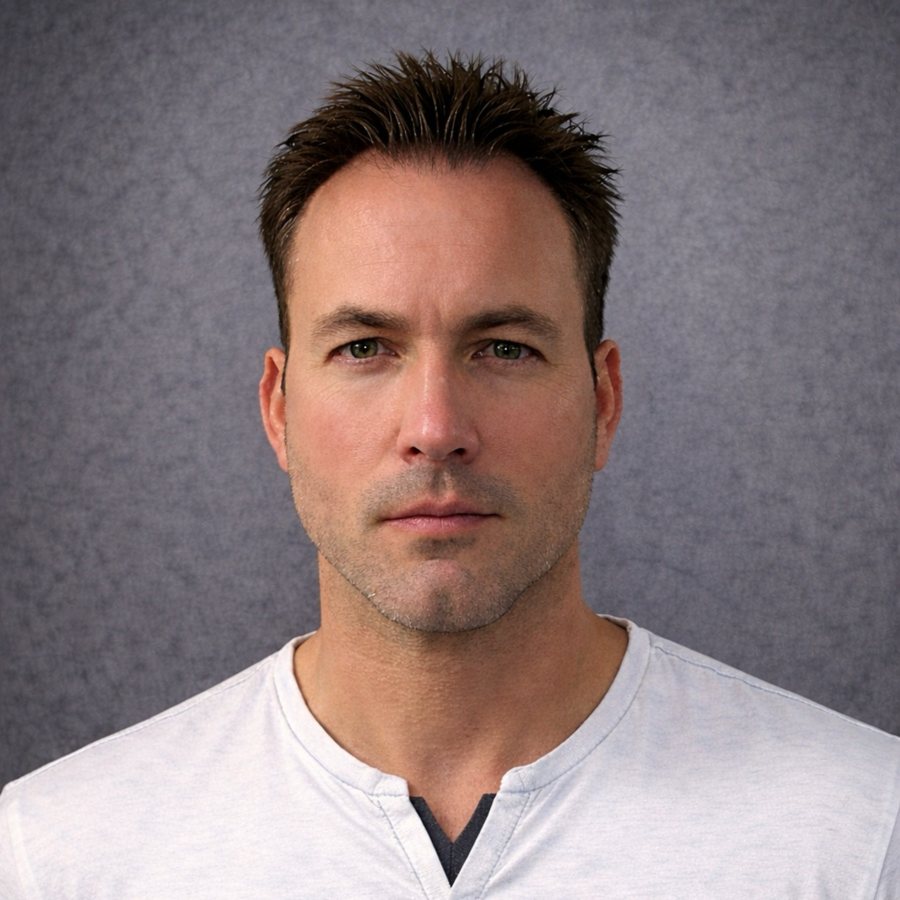

This is the personal blog of Daniel Buchner, a product leader, software engineer, and open source developer. I currently work at Gemini on an array of products.

Where my career has taken me:

- Block - Head of Decentralized Identity

- Microsoft - Helped launch the Edge browser, started the personal digital identity group (now known as Entra Verified ID)

- Mozilla - Led the Developer Ecosystem team, Web standards development

Featured

- PassSeeds - Hijacking Passkeys to Unlock Cryptographic Use Cases

  Published: at 09:15 AM

  An exploration of using Passkeys as generalized cryptographic seed material to address new use cases, while inheriting the benefits of cross-device synced keys with native biometric UX.

- Identity Is the Dark Matter & Energy of Our World

  Published: at 11:44 AM

  Exploring identity as a cosmological analog - proposing that like dark matter and energy in the universe, identity forms the vast unseen infrastructure that powers all our daily interactions.

- Scaling Decentralized Apps & Services via Blockchain-based Identity Indirection

  Published: at 04:02 PM

  Exploring how blockchain-based identity indirection can address the scalability challenges of decentralized applications and services while maintaining consensus guarantees.

- S(GH)PA: The Single-Page App Hack for GitHub Pages

  Published: at 09:41 AM

  A clever hack to enable single-page app routing on GitHub Pages by leveraging the 404.html file and preserving SEO-friendly status codes for crawlers.

Recent Posts

- Demythstifying Web Components

  Published: at 03:58 PM

  Dispelling common myths and FUD about Web Components by clarifying what they actually are - encapsulated, declarative, custom elements that enable reusable, interoperable functionality.

- Recapping the W3C Blockchain Standardization Workshop @ MIT

  Published: at 01:52 PM

  A recap of the W3C blockchain workshop at MIT discussing potential Web standards for blockchain technology, particularly focusing on blockchain-based identity authentication.

- The Nickelback Persistence Conjecture

  Published: at 09:57 AM

  Debunking fallacious arguments about data persistence in decentralized systems and explaining why economic incentives in distributed networks are more robust than critics claim.

- The Web Beyond: How blockchain identity will transform our world

  Published: at 12:32 PM

  A vision of how blockchain-based identity systems could revolutionize daily life through seamless, secure, and interoperable digital interactions across all devices and services.